Add Service Monitor

SAAP gathers base metrics that show how our pods are doing. To get application-specific metrics (such as response time, the number of reviews, or active users) alongside the base ones, we need another object: ServiceMonitor. It tells Prometheus at which endpoint the metrics are exposed so that Prometheus can scrape them. Once Prometheus has the metrics, we can run queries on them (just like we did before!) and create shiny dashboards!

Objectives

- Configure ServiceMonitor objects to gather application-specific metrics, such as response time and number of reviews, alongside the base metrics for better performance analysis.

Key Results

- Enhance observability by implementing robust monitoring and metrics collection for applications within SAAP.

- Enable developers to analyze and interpret the performance and behavior of their applications through metrics visualization.

Tutorial

Now, let's add the ServiceMonitor for our stakater-nordmart-review-api application.

-

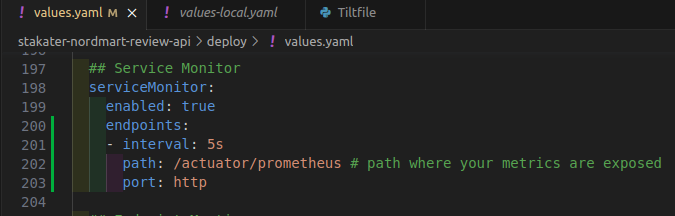

Open up

stakater-nordmart-review-api/deploy/values.yamlfile. Add this YAML in yourvalues.yamlfile.## Service Monitor serviceMonitor: enabled: true endpoints: - interval: 5s path: /actuator/prometheus # path where your metrics are exposed port: httpIt should look like this:

Note

The indentation should be application.serviceMonitor.
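To make the indentation note concrete, here is a minimal sketch of how the block might sit in `values.yaml`, assuming the file already has a top-level `application` key (any other keys under `application` are omitted here):

```yaml
application:
  # ...existing application settings stay as they are...

  ## Service Monitor
  serviceMonitor:
    enabled: true
    endpoints:
    - interval: 5s
      path: /actuator/prometheus # path where your metrics are exposed
      port: http
```

If `serviceMonitor` ends up at the top level instead of under `application`, it no longer sits at the `application.serviceMonitor` path that the note calls for.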

-

Save and run

tilt upat the root of your directory. Hit the space bar and the browser withTILTlogs will be shown. If everything is green then the changes will be deployed on the cluster. -

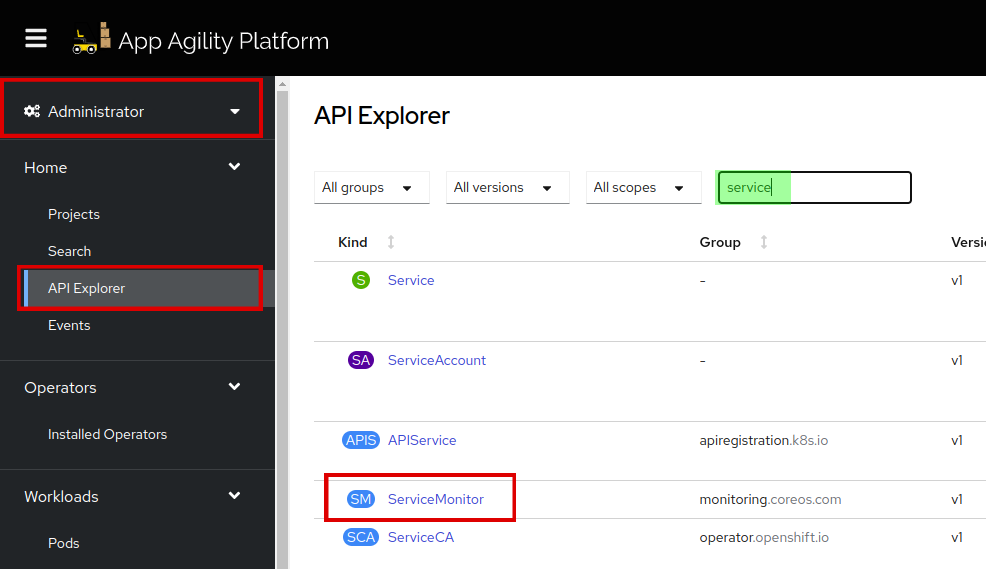

To find

serviceMonitorin SAAP, first login with your credentials, go toAPI Explorer, filter by service, and findServiceMonitor:Click on

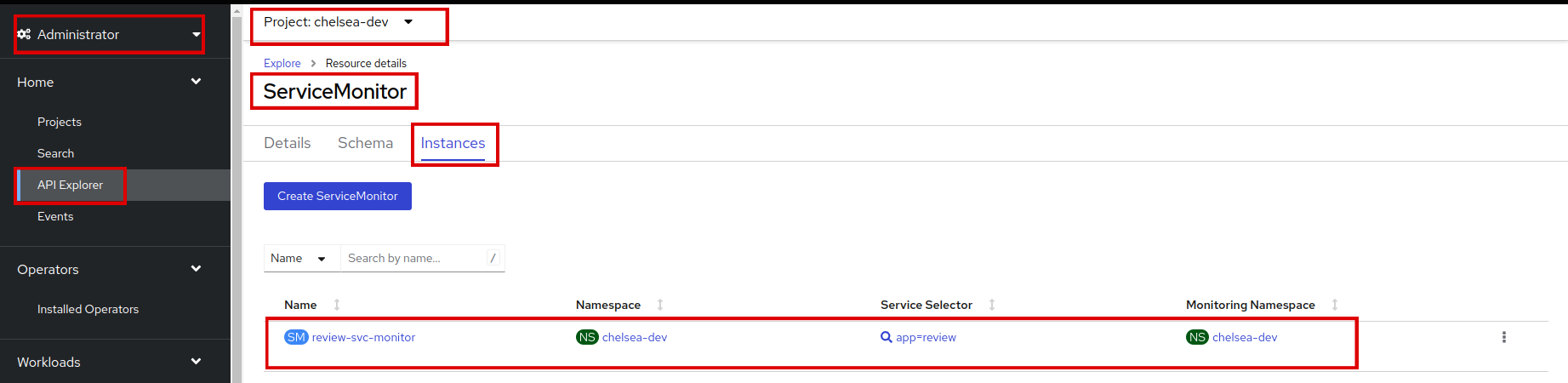

ServiceMonitorand go toinstances:

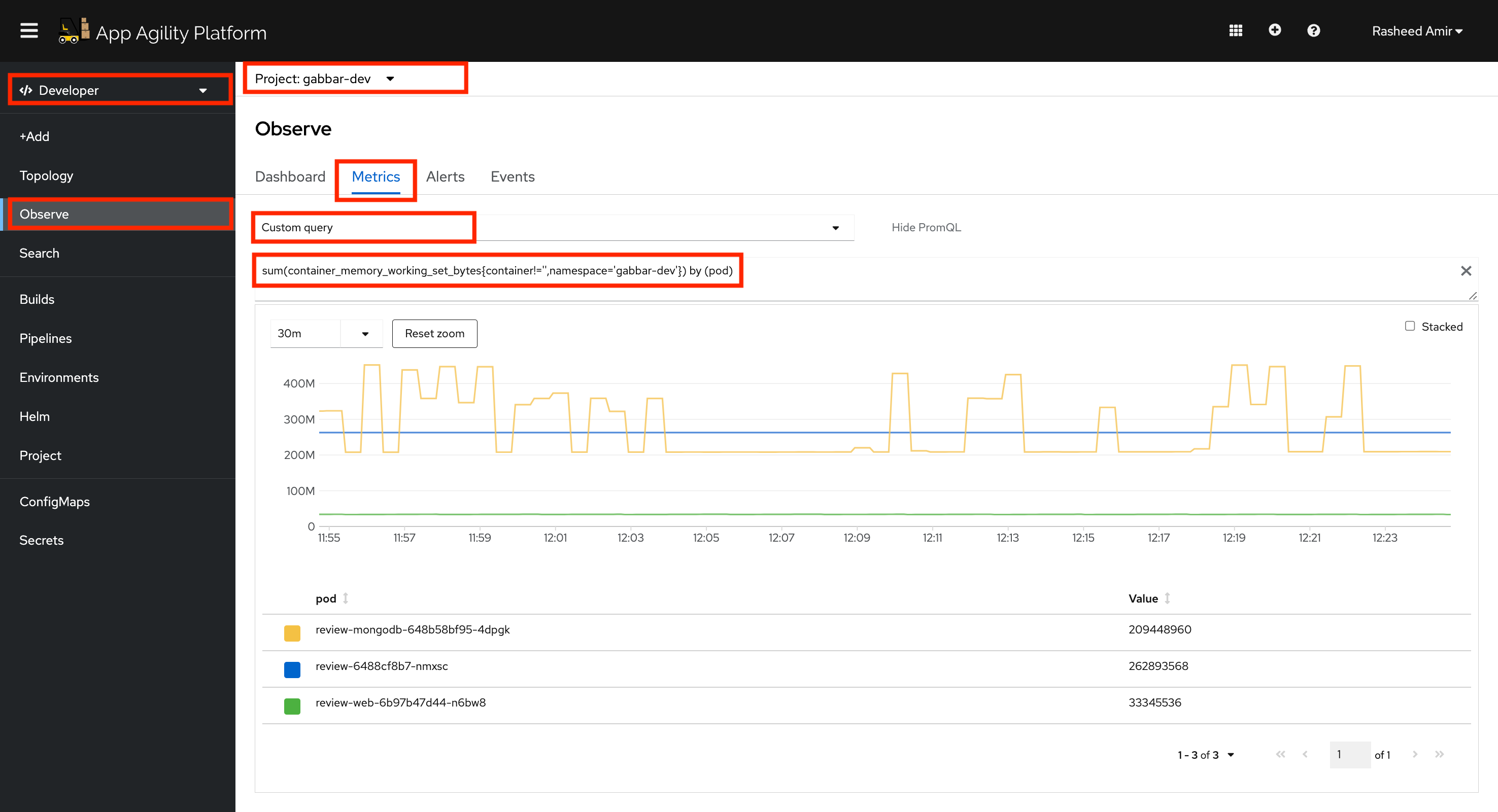
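For orientation, the ServiceMonitor instance listed there might look roughly like the sketch below when viewed as YAML. The `endpoints` values come from the `values.yaml` change above; the resource name and the `app` label are hypothetical placeholders, and the object actually generated for your application may carry different names, labels, and extra fields.

```yaml
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: review-svc-monitor          # hypothetical name
  namespace: "<your-namespace>"
spec:
  endpoints:
  - interval: 5s                    # scrape every 5 seconds
    path: /actuator/prometheus      # path where your metrics are exposed
    port: http                      # name of the Service port to scrape
  namespaceSelector:
    matchNames:
    - "<your-namespace>"            # namespace in which to look for the Service
  selector:
    matchLabels:
      app: review                   # hypothetical label carried by the Service
```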

You can easily run queries across the namespace with PromQL, the query language for Prometheus. Run a PromQL query to get some information about the memory consumed by the pods in your <your-namespace> namespace/project.

- Go to Developer > Observe > Metrics. Select `Custom query` and paste the below query. Make sure to replace `your-namespace` before running it. Press Enter, and see the metrics for your application specifically.

Voila, you successfully exposed metrics for your application!

Move on to the next tutorial to see how to trigger alerts for your application.

Troubleshooting

If something doesn't work, here are some useful things to check:

- Start by running `curl` against localhost from within the pod, using the metrics path, to make sure that the metrics are exposed.

- Verify everything in `spec` in the ServiceMonitor:

  - Verify that the endpoints `port` matches the Service `spec.ports.name`.
  - Verify that `namespaceSelector.matchNames` matches the Service `metadata.namespace`.
  - Verify that `selector.matchLabels` matches the Service `metadata.labels`.

- Make sure that the labels required by the service monitor selector and the service monitor namespace selector have been applied to the ServiceMonitor and to the namespace where the ServiceMonitor is present, respectively. You can check these selectors in Search -> Resources -> Prometheus and then check the YAML manifest of the Prometheus CR.

- If nothing else works, start by port-forwarding the Prometheus pod on port 9090 and navigate to the Prometheus UI over HTTP. Check under service discovery that the ServiceMonitor is picked up, and check under targets that Prometheus is able to scrape the metrics. If the target is visible but metrics are not scraped, the error should be visible under targets.
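The matching checks above can be easier to follow next to the objects involved. The sketch below shows the Service fields a ServiceMonitor is compared against, plus the two selectors in the Prometheus CR. All names, labels, and port numbers are hypothetical placeholders chosen for illustration, not values taken from SAAP or from the application's chart.

```yaml
# Service: the fields that the ServiceMonitor spec must line up with.
apiVersion: v1
kind: Service
metadata:
  name: review                      # hypothetical name
  namespace: "<your-namespace>"     # must appear in the ServiceMonitor namespaceSelector.matchNames
  labels:
    app: review                     # hypothetical; must satisfy the ServiceMonitor selector.matchLabels
spec:
  selector:
    app: review                     # hypothetical pod label
  ports:
  - name: http                      # must equal the ServiceMonitor endpoints port
    port: 8080                      # hypothetical port number
    targetPort: 8080
---
# Prometheus CR: decides which ServiceMonitors are picked up at all.
apiVersion: monitoring.coreos.com/v1
kind: Prometheus
metadata:
  name: example-prometheus          # hypothetical name
spec:
  serviceMonitorSelector:
    matchLabels:
      example-monitor-label: "true" # hypothetical; must be present on the ServiceMonitor
  serviceMonitorNamespaceSelector:
    matchLabels:
      example-namespace-label: "true" # hypothetical; must be present on the ServiceMonitor's namespace
```

Reading your own manifests side by side in this way usually shows quickly whether a port name, namespace, or label is the piece that does not match.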